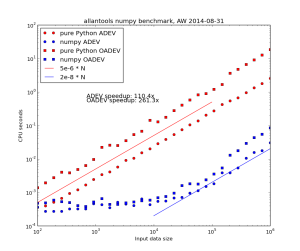

I wrote a first, rough (and very slow!) implementation of the Modified Total Deviation, mtotdev(), for allantools.

mtotdev() combines the features of the modified Allan deviation mdev() (it can distinguish between white and flicker phase modulation) and the total deviation totdev() (better confidence intervals at large tau).

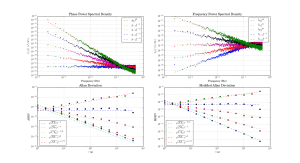

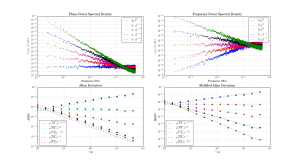

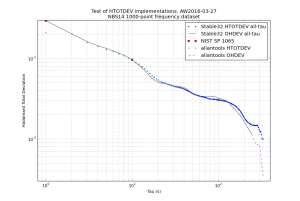

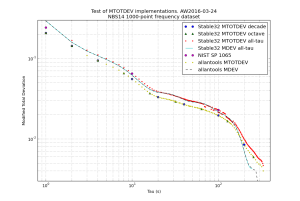

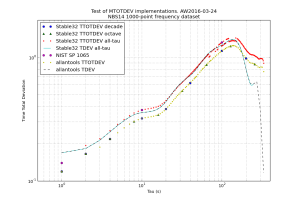

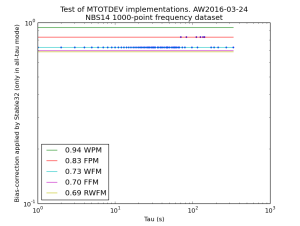

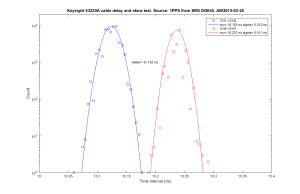

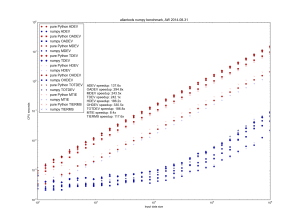

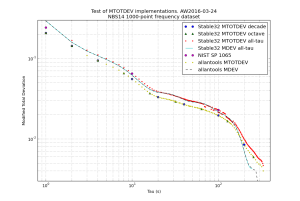

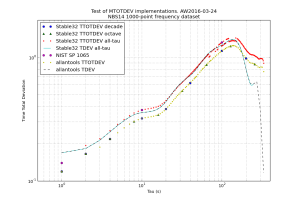

Here are some results. The Time Total Deviation ttotdev() also follows trivially from this work, since it is just a scaled version of mtotdev(). I used the "NBS14" 1000-point frequency dataset and compared my results against Stable32 and against those in NIST SP 1065 (Table 31, page 108).
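To make the "scaled version" remark concrete, here is a minimal sketch of the scaling, assuming the same relation that links tdev() to mdev(), i.e. ttotdev = tau/sqrt(3) * mtotdev (tau-squared over three for the variances). The helper name `ttotdev_from_mtotdev` and the numbers are made up for illustration; this is not the allantools code.

```python
import math

def ttotdev_from_mtotdev(taus, mtot_devs):
    """Hypothetical helper: scale modified total deviations to time total
    deviations, assuming ttotdev(tau) = tau / sqrt(3) * mtotdev(tau)."""
    return [tau / math.sqrt(3.0) * dev for tau, dev in zip(taus, mtot_devs)]

# Illustrative values only, not results from the NBS14 dataset.
taus = [1.0, 2.0, 4.0]
mtot = [3.0e-11, 2.0e-11, 1.0e-11]
ttot = ttotdev_from_mtotdev(taus, mtot)

for tau, dev in zip(taus, ttot):
    print(f"tau = {tau:g} s, ttotdev = {dev:.3e} s")
```

In terms of variances this is a factor of tau-squared over three, so no extra work on the estimator itself should be needed.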

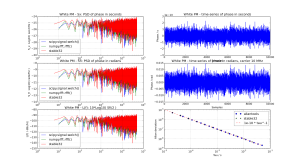

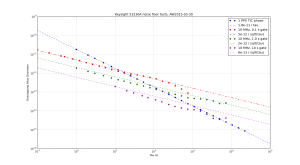

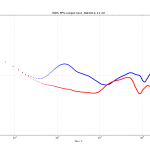

The figures show mtotdev() and ttotdev() from Stable32 runs and from allantools. I've also added mdev() and tdev() traces to compare against. At first sight the mismatch between some of the traces looks strange, but mtotdev() is a biased estimator, and Stable32 applies a bias correction, which explains the results.

Somewhat surprisingly, Stable32 applies a bias correction only when run in the "all-tau" mode. These numbers agree with those from the NIST SP 1065 table. For this dataset Stable32 is undecided on which power law the dataset follows at large tau, which results in deviations that jump up and down (red datapoints), because a different bias correction is applied at different tau.

When Stable32 is run in "octave-tau" or "decade-tau" mode no bias correction is applied. These numbers agree with the ones from allantools.mtotdev().

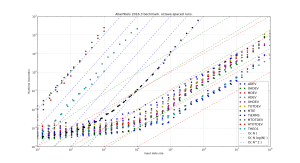

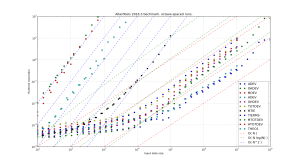

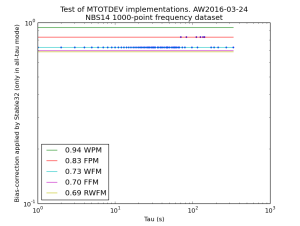

This figure shows the ratio of variances between allantools.mtotdev() (no bias correction) and a Stable32 all-tau run (bias correction applied). The horizontal lines correspond to the bias-correction values for different noise processes.
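A ratio like this can be computed along the following lines. This is only a sketch: the function name `variance_ratio` is hypothetical, the input values are invented, and putting the uncorrected result in the numerator is my assumption about how the ratio is formed. Since both tools report deviations, the values are squared to get a ratio of variances.

```python
def variance_ratio(devs_uncorrected, devs_corrected):
    """Hypothetical helper: per-tau ratio of variances from two lists of
    deviations (assumed order: uncorrected / bias-corrected)."""
    return [(a / b) ** 2 for a, b in zip(devs_uncorrected, devs_corrected)]

# Invented numbers for illustration, not taken from the figure.
uncorrected = [2.2e-11, 1.1e-11]
corrected = [2.0e-11, 1.0e-11]
ratios = variance_ratio(uncorrected, corrected)

for r in ratios:
    print(f"variance ratio = {r:.3f}")
```

If the bias correction were a single constant factor, the ratio would sit on one line for all tau; jumps between lines would correspond to a different noise type being picked at different tau.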

There also seems to be a misprint in NIST SP 1065, equation (28) on page 26, which says ttotdev() is scaled by tau-cubed (when tau-squared is correct). Who reads these things anyway? Not many, it seems 😉