As I'm very much an amateur programmer without much time to learn new stuff, I've decided my CAM algorithms are going to be written in Python (don't hold your breath, they'll be online when they're online...). At this stage, the benefits of rapid development will more than outweigh Python's performance issues.

But then I found Mark Dufour's project shedskin (see also his blog and his MSc thesis), a Python to C++ compiler! Can you have the best of both worlds? Develop and debug your code interactively in Python and then, once you're happy with it, translate it automagically to C++ and have it run as fast as native code?

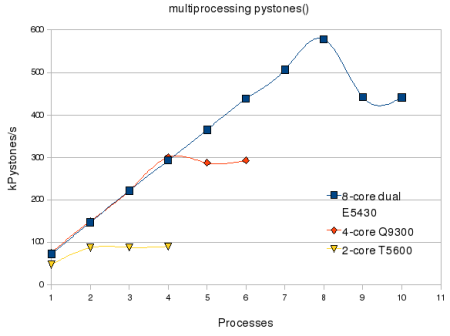

Well, shedskin doesn't support every Python construct (yet?), and only a limited number of standard library modules are supported. Still, I think it's a pretty cool tool. For someone who doesn't look forward to learning C++ from the ground up, typing 'shedskin -e myprog.py' followed by 'make' is a very simple way to create fast Python extensions! As a test, I ran shedskin on the pystone benchmark and called both the Python and the C++ version from my multiprocessing test code:

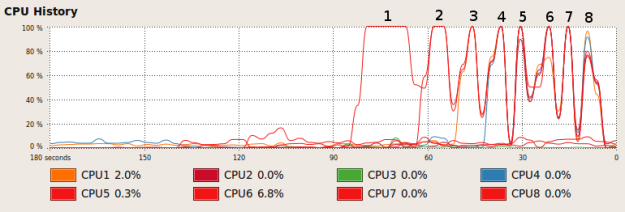

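Python version

| Processes | Pystones | Wall time (s) | Pystones/s | Speedup |
|---|---|---|---|---|
| 1 | 50000 | 0.7 | 76171 | 1.0X |
| 2 | 100000 | 0.7 | 143808 | 1.9X |
| 3 | 150000 | 0.7 | 208695 | 2.7X |
| 4 | 200000 | 0.8 | 264410 | 3.5X |
| 5 | 250000 | 1.0 | 244635 | 3.2X |
| 6 | 300000 | 1.2 | 259643 | 3.4X |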

'shedskinned' C++ version

| Processes | Pystones | Wall time (s) | Pystones/s | Speedup |
|---|---|---|---|---|
| 1 | 5000000 | 2.9 | 1696625 | 1.0X |
| 2 | 10000000 | 3.1 | 3234625 | 1.9X |
| 3 | 15000000 | 3.1 | 4901829 | 2.9X |
| 4 | 20000000 | 3.4 | 5968676 | 3.5X |
| 5 | 25000000 | 4.4 | 5714151 | 3.4X |
| 6 | 30000000 | 5.1 | 5890737 | 3.5X |
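To put a number on the gain, you can divide the C++ pystones/s by the Python pystones/s at the same process count. The short snippet below does just that with the values copied from the two tables. Every row comes out between roughly 22x and 23.5x.

```python
# Pystones/s per process count, copied from the two tables above.
python_rate = {1: 76171, 2: 143808, 3: 208695, 4: 264410, 5: 244635, 6: 259643}
cpp_rate = {1: 1696625, 2: 3234625, 3: 4901829, 4: 5968676, 5: 5714151, 6: 5890737}

# Ratio of compiled to interpreted throughput at the same process count.
ratios = {n: cpp_rate[n] / python_rate[n] for n in python_rate}

for n, r in ratios.items():
    print(f"{n} processes: {r:.1f}x")
```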

A speedup of a little over 20x (roughly 22x to 23.5x when comparing pystones/s at the same process count).
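For anyone who wants to reproduce this kind of table, here is a minimal sketch of a multiprocessing harness. It is not my actual test code. The names `work`, `timed_run` and `summarize` are hypothetical, and `work` is a CPU-burning stand-in for one pystone run. In a real test you would call the pure-Python pystone module or its shedskin-compiled extension there instead. Speedup is measured relative to the single-process run, just like the Speedup column above.

```python
import time
from multiprocessing import Pool


def work(loops):
    """Hypothetical CPU-bound stand-in for one pystone run.

    A real test would call the pystone benchmark here (the pure-Python
    module or its shedskin-compiled extension); this just burns CPU
    and reports how many loops it did.
    """
    total = 0
    for i in range(loops):
        total += i % 7
    return loops


def timed_run(processes, loops_per_process):
    """Run `work` once in each of `processes` workers; return (loops, wall seconds).

    The timing includes pool start-up, so very short runs understate throughput.
    """
    start = time.perf_counter()
    with Pool(processes) as pool:
        done = pool.map(work, [loops_per_process] * processes)
    return sum(done), time.perf_counter() - start


def summarize(runs):
    """Turn (processes, loops, wall) tuples into rows with rate and speedup.

    Speedup is relative to the first (single-process) run, as in the tables.
    """
    base_rate = runs[0][1] / runs[0][2]
    rows = []
    for processes, loops, wall in runs:
        rate = loops / wall
        rows.append((processes, loops, wall, rate, rate / base_rate))
    return rows


if __name__ == "__main__":
    # The guard matters: on platforms that spawn workers, each child re-imports this module.
    runs = [(n, *timed_run(n, 50000)) for n in range(1, 7)]
    for p, loops, wall, rate, speedup in summarize(runs):
        print(f"{p} {loops} {wall:.1f} {rate:.0f} {speedup:.1f}X")
```

Keeping the timing in `timed_run` separate from the arithmetic in `summarize` lets you reuse the same summary code for both the Python and the compiled runs.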