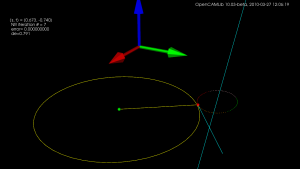

For exploring and visualizing various pocketing (area-clearing) milling toolpath strategies, I am thinking about reviving the "pixel-mowing" idea from 2007. This would simply be a bitmap with one color for the remaining stock and another for the already-cleared area. It would be nice to log and/or plot the material removal rate (MRR) or the cutter engagement angle, perhaps graphed next to the simulation. Another option is a simulated spindle soundtrack whose pitch is, e.g., proportional to the inverse of the MRR. It should be possible to demonstrate how the MRR spikes at sharp corners with the classical zigzag and offset pocketing strategies. The solution is some kind of high-speed machining (HSM) strategy in which the MRR is kept below a given limit at all times.
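A minimal pure-Python sketch of the pixel-mowing idea, assuming a boolean grid (`True` = stock remaining) and a circular cutter that advances one pixel per step along a polyline. With constant feed and depth of cut, the number of pixels cleared per step is a stand-in for the MRR. All names (`disk_offsets`, `mow`, `polyline`, `corner_demo`) and the demo geometry are hypothetical, chosen only for illustration. The demo feeds the cutter along a wall with a small stepover into an inside corner, where the per-step count should rise well above its straight-line value.

```python
import math


def disk_offsets(radius):
    """Integer pixel offsets (dx, dy) that lie inside a disk of the given radius."""
    r = int(math.ceil(radius))
    return [(dx, dy) for dx in range(-r, r + 1) for dy in range(-r, r + 1)
            if dx * dx + dy * dy <= radius * radius]


def mow(stock, path, radius):
    """Move a circular cutter along `path` over the grid `stock` (stock[y][x],
    True = material left), clearing every pixel under the cutter.
    Returns the number of pixels removed at each path point (an MRR proxy)."""
    height, width = len(stock), len(stock[0])
    offsets = disk_offsets(radius)
    removed = []
    for cx, cy in path:
        count = 0
        for dx, dy in offsets:
            x, y = cx + dx, cy + dy
            if 0 <= x < width and 0 <= y < height and stock[y][x]:
                stock[y][x] = False
                count += 1
        removed.append(count)
    return removed


def polyline(points):
    """Integer positions 1 px apart along axis-aligned segments between points."""
    path = []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        n = max(abs(x1 - x0), abs(y1 - y0))
        for i in range(n):
            path.append((x0 + (x1 - x0) * i // n, y0 + (y1 - y0) * i // n))
    path.append(points[-1])
    return path


def corner_demo(size=120, radius=8, stepover=3, wall=80):
    """Hypothetical demo: material remains only where x >= wall or y >= wall
    (an inside corner). The cutter enters from outside the grid, runs along one
    wall biting `stepover` pixels into it, turns 90 degrees and runs along the other."""
    stock = [[x >= wall or y >= wall for x in range(size)] for y in range(size)]
    c = wall - radius + stepover  # tool-centre line for the chosen stepover
    path = polyline([(-radius - 1, c), (c, c), (c, 10)])
    return mow(stock, path, radius)


if __name__ == "__main__":
    mrr = corner_demo()
    steady = sorted(mrr)[len(mrr) // 2]  # median = straight-line value
    print("steady-state pixels/step:", steady, "peak at corner:", max(mrr))
```

Plotting `mrr` against the step index, or mapping it to a pitch, gives the kind of graph or soundtrack described above. A real simulation would use a finer grid and sub-pixel steps.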

My plan is to play with maximal inscribed circles, which are readily available from the medial axis, as in Elber et al. (2006).
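For a point on the medial axis, the maximal inscribed circle centred there has a radius equal to the distance from that point to the nearest boundary. The following is a brute-force sketch for a polygonal pocket, assuming straight edges only and a centre already known to lie inside the polygon. It does not compute the medial axis itself and is not the method of Elber et al.; the names `point_segment_distance` and `clearance_radius` are hypothetical.

```python
import math


def point_segment_distance(p, a, b):
    """Euclidean distance from point p to the line segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0:  # degenerate segment: plain point distance
        return math.hypot(px - ax, py - ay)
    # Parameter of the orthogonal projection of p onto the segment, clamped to [0, 1].
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def clearance_radius(center, polygon):
    """Radius of the largest disk centred at `center` that fits inside the
    polygon (vertices listed in order): the distance to the nearest edge.
    Assumes `center` lies inside the polygon."""
    edges = zip(polygon, polygon[1:] + polygon[:1])
    return min(point_segment_distance(center, a, b) for a, b in edges)


rect = [(0, 0), (20, 0), (20, 10), (0, 10)]
print(clearance_radius((8, 5), rect))
```

For this 20x10 rectangle the medial axis contains the segment y = 5, 5 <= x <= 15, and along it the clearance radius is 5, half the pocket width. A cutter of radius R fits at a medial-axis point whenever the clearance radius there is at least R.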

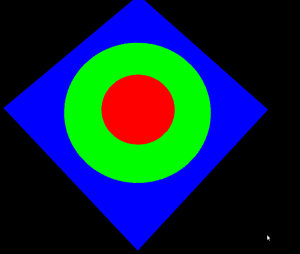

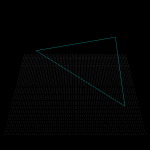

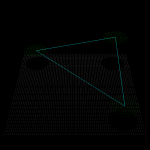

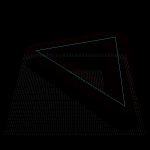

Anyway, the first step is to render a bitmap in VTK, together with the tool/toolpath and the original pocket geometry. So far, the script only draws a test bitmap:

```python
import math

import vtk

SIZE = 512  # bitmap width and height in pixels

# One-slice RGB image. VTK 6+ takes the scalar type and the number of
# components as AllocateScalars() arguments.
vol = vtk.vtkImageData()
vol.SetDimensions(SIZE, SIZE, 1)
vol.SetSpacing(1, 1, 1)
vol.SetOrigin(0, 0, 0)
vol.AllocateScalars(vtk.VTK_UNSIGNED_CHAR, 3)
scalars = vol.GetPointData().GetScalars()  # 3-component unsigned char array

red = (255, 0, 0)
green = (0, 255, 0)
blue = (0, 0, 255)

# Test pattern: a red disk (r < 100) inside a green ring (r < 200), blue elsewhere.
for n in range(SIZE):          # n: row (y)
    for m in range(SIZE):      # m: column (x)
        x = SIZE / 2 - m
        y = SIZE / 2 - n
        d = math.sqrt(x * x + y * y)
        if d < 100:
            col = red
        elif d < 200:
            col = green
        else:
            col = blue
        # vtkImageData stores points with x varying fastest.
        scalars.SetTuple3(n * SIZE + m, *col)
vol.Modified()

# Image actor; turning interpolation off keeps the pixels crisp.
ia = vtk.vtkImageActor()
ia.SetInputData(vol)  # VTK 6+ (older versions used SetInput)
ia.InterpolateOff()

ren = vtk.vtkRenderer()
ren.AddActor(ia)
renWin = vtk.vtkRenderWindow()
renWin.AddRenderer(ren)
iren = vtk.vtkRenderWindowInteractor()
iren.SetRenderWindow(renWin)
iren.SetInteractorStyle(vtk.vtkInteractorStyleTrackballCamera())

camera = vtk.vtkCamera()
camera.SetClippingRange(0.01, 1000)
camera.SetFocalPoint(255, 255, 0)
camera.SetPosition(0, 0.1, 500)
camera.SetViewAngle(50)
camera.SetViewUp(1, 1, 0)
ren.SetActiveCamera(camera)

iren.Initialize()
renWin.Render()
iren.Start()
```